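To collect log entries that span multiple lines, we use the multiline codec plugin. It merges several lines into a single event, which is what you need when collecting things like Java exception stack traces.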

Official documentation: https://www.elastic.co/guide/en/logstash/current/plugins-codecs-multiline.html

The configuration looks like this:

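```
input {
  stdin {
    codec => multiline {
      pattern => "pattern, a regexp"
      negate => "true" or "false"
      what => "previous" or "next"
    }
  }
}
```

Notes:

- `pattern`: required. The regular expression to match.
- `what`: required, either `previous` or `next`. When a line matches the pattern, this decides whether the line belongs to the previous event or to the next one. In other words, it decides whether a matched line is merged with the lines before it or with the lines after it.
- `negate`: optional, `true` or `false` (default `false`). When `true`, the match is inverted, so `what` is applied to lines that do *not* match the pattern.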
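The following Python sketch shows how `pattern`, `negate` and `what` interact by emulating the grouping logic the options above describe. It is a hypothetical helper, and `multiline_merge` is not part of Logstash. It also differs from the real codec in two ways:

- It uses Python's `re` module instead of Logstash's grok-based matching, so grok names such as `%{TIMESTAMP_ISO8601}` are not understood.
- It emits whatever is still buffered when the input ends.

```python
import re
from typing import Iterable, List


def multiline_merge(lines: Iterable[str], pattern: str,
                    negate: bool = False, what: str = "previous") -> List[str]:
    """Hypothetical emulation of multiline grouping: returns one string per event."""
    if what not in ("previous", "next"):
        raise ValueError("what must be 'previous' or 'next'")
    regex = re.compile(pattern)
    events: List[str] = []
    buffer: List[str] = []

    def flush() -> None:
        # Turn the buffered lines into one event and start a fresh buffer.
        if buffer:
            events.append("\n".join(buffer))
            buffer.clear()

    for line in lines:
        # A line "matches" when the regex hits; negate=True inverts that result.
        matched = bool(regex.search(line)) != negate
        if what == "previous":
            # A non-matching line starts a new event; a matching one joins the previous.
            if not matched:
                flush()
            buffer.append(line)
        else:
            # what="next": a matching line waits for the following line,
            # and a non-matching line closes the current event.
            buffer.append(line)
            if not matched:
                flush()
    flush()  # sketch-only simplification: emit the leftover buffer at end of input
    return events
```

Usage examples:

- `multiline_merge(lines, r"^\s")` glues indented lines onto the line before them.
- `multiline_merge(lines, r"\\$", what="next")` joins a line that ends in a backslash with the line that follows it.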

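For example, Java logs are multi-line. A log entry usually starts at the leftmost column, and every following line is indented. We can match that like this, which means any line that starts with whitespace belongs to the previous line: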

```
input {
  stdin {
    codec => multiline {
      pattern => "^\s"
      what => "previous"
    }
  }
}
```
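The Python sketch below applies only this one rule (`pattern => "^\s"`, `what => "previous"`, `negate` left at `false`) to a short stack trace. It rests on a few assumptions:

- The function name and sample lines are hypothetical.
- Frames are indented with a tab, as `Throwable.printStackTrace` prints them.
- The last event is emitted at end of input for simplicity.

```python
import re
from typing import List

# Same test as pattern => "^\s": does the line start with whitespace?
INDENTED = re.compile(r"^\s")


def merge_indented_lines(lines: List[str]) -> List[str]:
    """Hypothetical helper: append every indented line to the event before it."""
    events: List[List[str]] = []
    for line in lines:
        if INDENTED.search(line) and events:
            events[-1].append(line)  # continuation line: glue onto previous event
        else:
            events.append([line])    # unindented line: start a new event
    return ["\n".join(event) for event in events]


if __name__ == "__main__":
    sample = [
        'Exception in thread "main" java.lang.IllegalStateException: boom',
        "\tat com.example.App.run(App.java:10)",
        "\tat com.example.App.main(App.java:5)",
        "Caused by: java.io.IOException: disk",
        "\t... 2 more",
    ]
    for event in merge_indented_lines(sample):
        print(repr(event))
```

An unindented `Caused by:` line starts a new event under this rule, and the sketch does the same. The Elasticsearch log later in this post has the same problem: its `java.lang.IllegalArgumentException: ...` line is not indented, so `^\s` alone would split that entry in two.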

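The next example merges lines that do not start with a date into the previous line. Any line that does not begin with a timestamp is appended to the line before it: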

```
input {
  file {
    path => "/var/log/someapp.log"
    codec => multiline {
      pattern => "^%{TIMESTAMP_ISO8601} "
      negate => true
      what => "previous"
    }
  }
}
```
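This is a minimal Python sketch of the same `negate => true`, `what => "previous"` rule. The helper name and sample lines are hypothetical. Python has no grok, so the regex is only a simplified stand-in for `%{TIMESTAMP_ISO8601}`, not its exact definition. Like the pattern above, the regex requires a space after the timestamp.

```python
import re
from typing import List

# Simplified stand-in for grok's %{TIMESTAMP_ISO8601} followed by a space,
# e.g. "2020-12-01 12:41:41,610 " or "2020-12-01T12:41:46.751Z ".
# Assumption: this is NOT the exact grok definition (compact forms like "T1241" are not covered).
STARTS_WITH_TIMESTAMP = re.compile(
    r"^\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}(?::\d{2}(?:[.,]\d+)?)?(?:Z|[+-]\d{2}:?\d{2})? "
)


def merge_until_timestamp(lines: List[str]) -> List[str]:
    """Hypothetical helper: lines WITHOUT a leading timestamp join the previous event."""
    events: List[List[str]] = []
    for line in lines:
        has_timestamp = STARTS_WITH_TIMESTAMP.search(line) is not None
        # negate => true flips the test: it is the NON-match that triggers the merge.
        if not has_timestamp and events:
            events[-1].append(line)
        else:
            events.append([line])
    return ["\n".join(event) for event in events]


if __name__ == "__main__":
    sample = [
        "2020-12-01 12:41:41,610 INFO started",
        "java.lang.RuntimeException: boom",
        "\tat Foo.bar(Foo.java:1)",
        "2020-12-01T12:41:46.751Z ERROR failed",
    ]
    for event in merge_until_timestamp(sample):
        print(repr(event))
```

Keying on "starts with a timestamp" catches every continuation line, indented or not. That makes it more robust than `^\s` for traces that contain unindented lines such as the exception message.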

Now let's look at a practical example: collecting Elasticsearch logs.

Below are some normal messages and error messages from an Elasticsearch log, trimmed down to serve as test data:

1

|

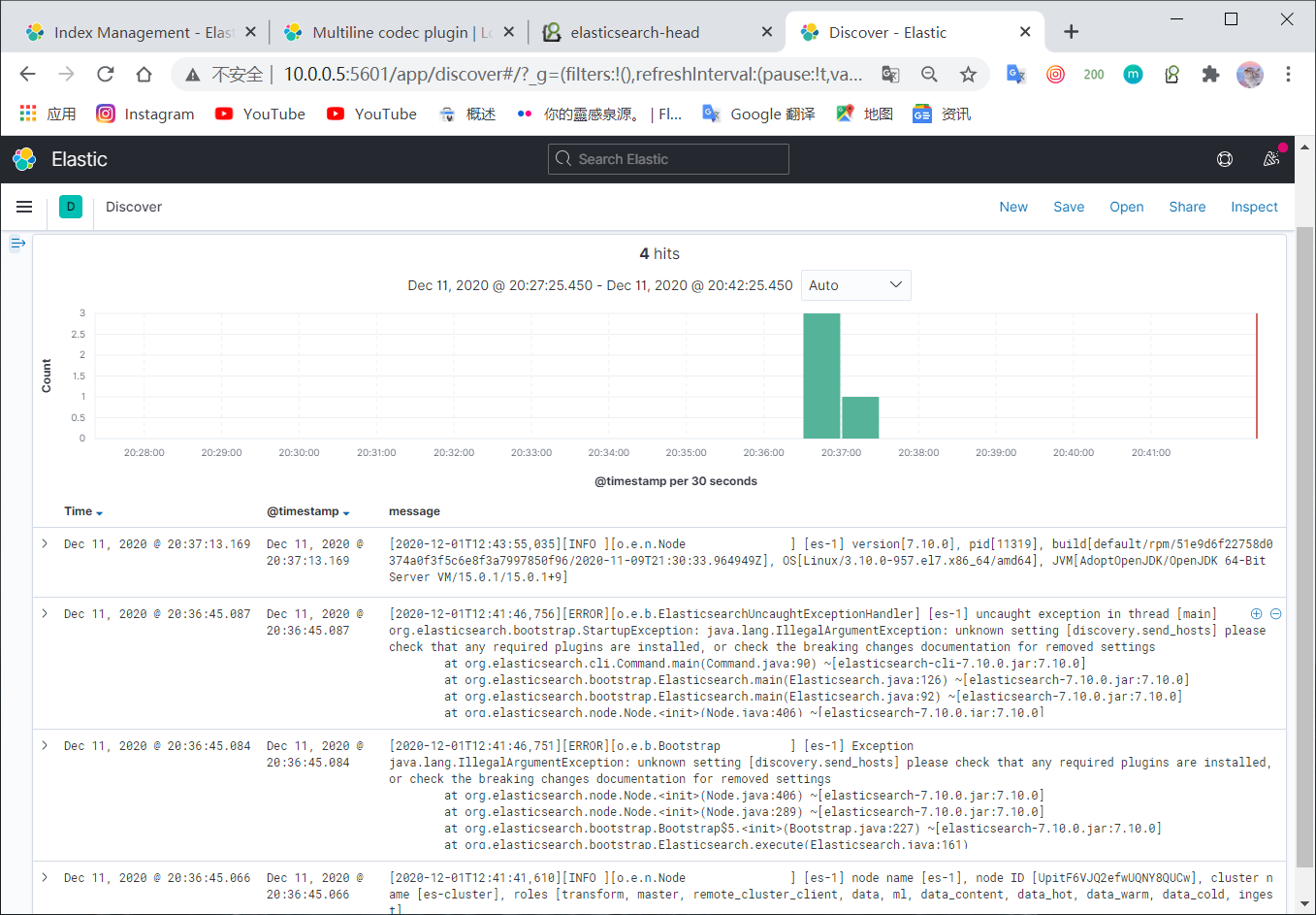

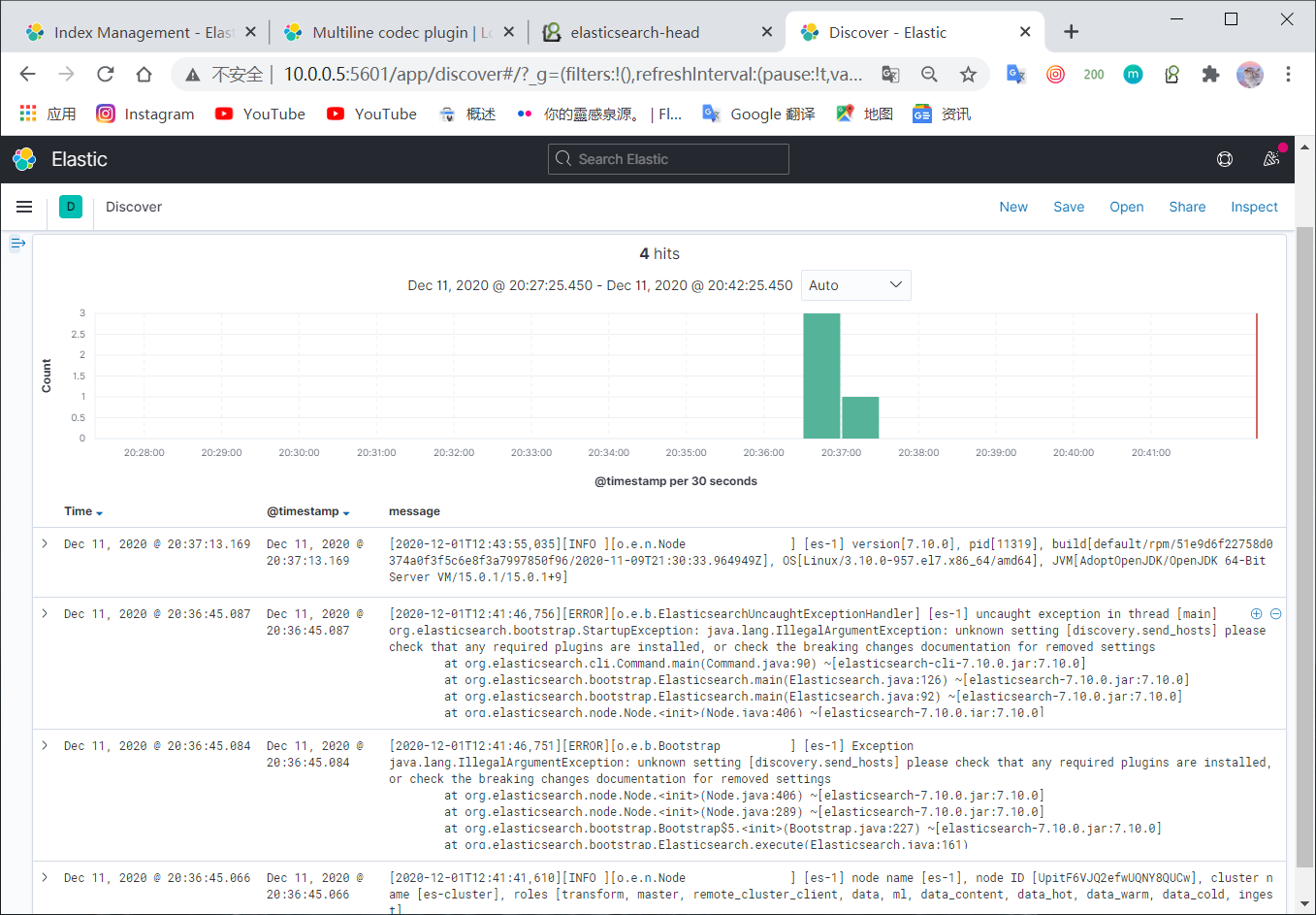

[2020-12-01T12:41:41,610][INFO ][o.e.n.Node ] [es-1] node name [es-1], node ID [UpitF6VJQ2efwUQNY8QUCw], cluster name [es-cluster], roles [transform, master, remote_cluster_client, data, ml, data_content, data_hot, data_warm, data_cold, ingest] [2020-12-01T12:41:46,751][ERROR][o.e.b.Bootstrap ] [es-1] Exception java.lang.IllegalArgumentException: unknown setting [discovery.send_hosts] please check that any required plugins are installed, or check the breaking changes documentation for removed settings at org.elasticsearch.node.Node.<init>(Node.java:406) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.node.Node.<init>(Node.java:289) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Bootstrap$5.<init>(Bootstrap.java:227) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:161) at org.elasticsearch.cli.Command.main(Command.java:90) [elasticsearch-cli-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:126) [elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) [elasticsearch-7.10.0.jar:7.10.0] [2020-12-01T12:41:46,756][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [es-1] uncaught exception in thread [main] org.elasticsearch.bootstrap.StartupException: java.lang.IllegalArgumentException: unknown setting [discovery.send_hosts] please check that any required plugins are installed, or check the breaking changes documentation for removed settings at org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-cli-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:126) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.node.Node.<init>(Node.java:406) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.node.Node.<init>(Node.java:289) 
~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Bootstrap$5.<init>(Bootstrap.java:227) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:227) ~[elasticsearch-7.10.0.jar:7.10.0] at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:393) ~[elasticsearch-7.10.0.jar:7.10.0] ... 6 more [2020-12-01T12:43:55,035][INFO ][o.e.n.Node ] [es-1] version[7.10.0], pid[11319], build[default/rpm/51e9d6f22758d0374a0f3f5c6e8f3a7997850f96/2020-11-09T21:30:33.964949Z], OS[Linux/3.10.0-957.el7.x86_64/amd64], JVM[AdoptOpenJDK/OpenJDK 64-Bit Server VM/15.0.1/15.0.1+9]

|

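Looking at the log above, every entry begins with `[`. All lines up to the next line that starts with `[` therefore belong to the same entry. This is where `negate` comes in: it inverts the match, so every line that does *not* start with `[` is appended to the previous event: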

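```
[root@es logstash]# vim es_test.yml
input {
  file {
    path => "/tmp/es_test.log"
    # Only takes effect for files Logstash has not already recorded in its sincedb
    start_position => "beginning"
    codec => multiline {
      # Every entry starts with "["; lines that don't are appended to the previous event
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}
output {
  elasticsearch {
    hosts => "10.0.0.5:9200"
    index => "es_test"
  }
}
[root@es logstash]# logstash -f es_test.yml
```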
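Before shipping anything to Elasticsearch, you can check offline how `^\[` with `negate => true` and `what => "previous"` should carve up the sample log. The Python sketch below does that. `group_es_log` is a hypothetical helper, and `SAMPLE` is an abbreviated copy of the log above with some stack frames and message text cut short.

```python
import re
from typing import List

# Mirrors: pattern => "^\[", negate => true, what => "previous"
EVENT_START = re.compile(r"^\[")


def group_es_log(text: str) -> List[str]:
    """Hypothetical helper: split raw Elasticsearch log text into one string per entry."""
    events: List[List[str]] = []
    for line in text.splitlines():
        # With negate => true, a line that does NOT start with "[" is merged backwards.
        if EVENT_START.search(line) is None and events:
            events[-1].append(line)
        else:
            events.append([line])
    return ["\n".join(event) for event in events]


# Abbreviated excerpt of the sample log above (frames and messages shortened).
SAMPLE = """\
[2020-12-01T12:41:41,610][INFO ][o.e.n.Node ] [es-1] node name [es-1], node ID [UpitF6VJQ2efwUQNY8QUCw], cluster name [es-cluster]
[2020-12-01T12:41:46,751][ERROR][o.e.b.Bootstrap ] [es-1] Exception
java.lang.IllegalArgumentException: unknown setting [discovery.send_hosts] ...
\tat org.elasticsearch.node.Node.<init>(Node.java:406) ~[elasticsearch-7.10.0.jar:7.10.0]
\tat org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) [elasticsearch-7.10.0.jar:7.10.0]
[2020-12-01T12:41:46,756][ERROR][o.e.b.ElasticsearchUncaughtExceptionHandler] [es-1] uncaught exception in thread [main]
org.elasticsearch.bootstrap.StartupException: java.lang.IllegalArgumentException: unknown setting [discovery.send_hosts] ...
\tat org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-cli-7.10.0.jar:7.10.0]
\t... 6 more
[2020-12-01T12:43:55,035][INFO ][o.e.n.Node ] [es-1] version[7.10.0], pid[11319]
"""

if __name__ == "__main__":
    for i, event in enumerate(group_es_log(SAMPLE), start=1):
        event_lines = event.splitlines()
        print(f"event {i}: {len(event_lines)} line(s), starts with {event_lines[0][:40]!r}")
```

This sketch emits the final `[2020-12-01T12:43:55,035]` entry as soon as the input ends. The real codec behaves differently: it keeps buffering an event until a line that starts a new one arrives, so that last entry may stay in the buffer for a while. The codec's `auto_flush_interval` option is meant for exactly that situation.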

Verify the result: